'Swarms of killer robots': Former Biden official says US military is afraid of using AI

A former Biden administration official who worked on cyber policy says the United States military would have trouble controlling its soldiers' use of artificial intelligence.

Mieke Eoyang, the deputy assistant secretary of defense for cyber policy during the Joe Biden administration, said that current AI models are poorly suited for use in the U.S. military and would be dangerous if implemented.

Citing concerns about "AI psychosis" and killer robots, Eoyang said the military cannot simply take an existing, publicly available AI agent and adapt it for military use. Doing so would, of course, involve giving a chatbot leeway to suggest the use of violence, or even the killing of a target.

Eoyang claimed that allowing such capabilities is cause for alarm at the Department of Defense, now the Department of War.

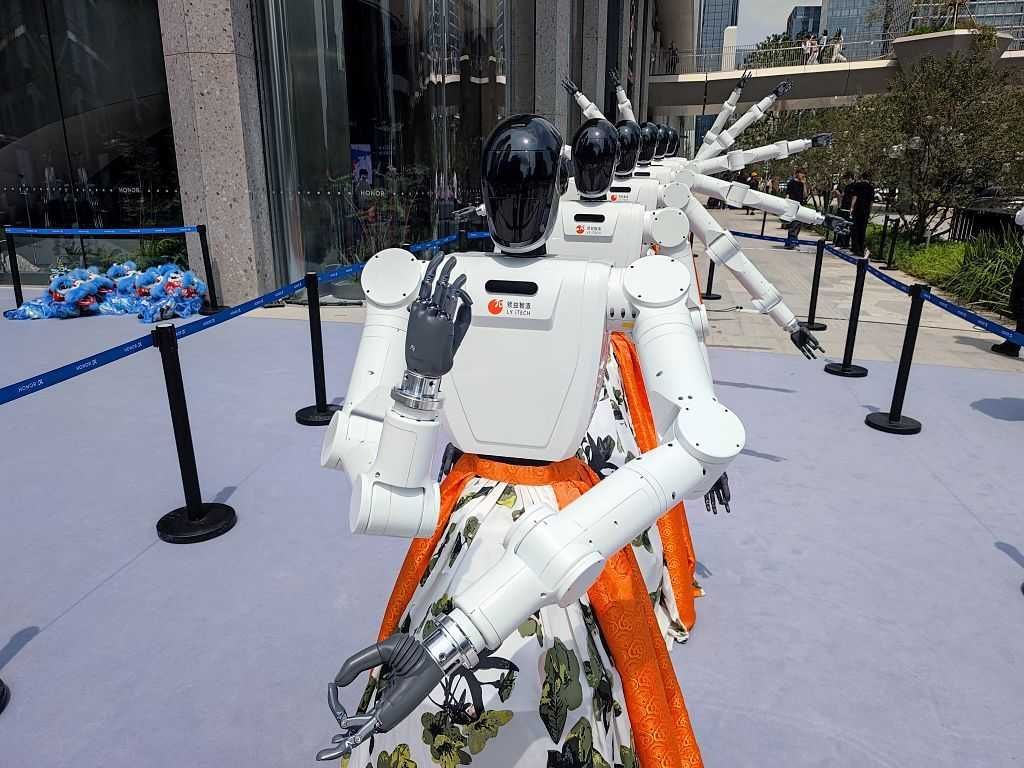

"A lot of the conversations around AI guardrails have been, how do we ensure that the Pentagon's use of AI does not result in overkill? There are concerns about 'swarms of AI killer robots,' and those worries are about the ways the military protects us," she told Politico.

"But there are also concerns about the Pentagon's use of AI that are about the protection of the Pentagon itself. Because in an organization as large as the military, there are going to be some people who engage in prohibited behavior. When an individual inside the system engages in that prohibited behavior, the consequences can be quite severe, and I'm not even talking about things that involve weapons, but things that might involve leaks."

Perhaps unbeknownst to Eoyang, the Department of War is already developing an internal AI system.

According to EdgeRunner CEO Tyler Saltsman, not only is the Department of War not afraid of AI, but it's "all about it."

Saltsman just wrapped up a test run with the Department of War during military exercises at Fort Carson, Colorado, and Fort Riley, Kansas. He recently told Blaze News about his offline chatbot, EdgeRunner AI, which is modernizing the delivery of information to troops on the ground.

"The Department of War is trying to fortify what their AI strategy looks like; they're not afraid of it," Saltsman told Blaze News in response to Eoyang's claims.

He added, "It's concerning that folks who are clueless on technology were put in such highly influential positions."

In her interview, Eoyang — a former MSNBC contributor — also raised concerns about operational security and that "malicious actors" could get "their hands on" AI tools used by the U.S. military.

"There are any number of things that you might be worried about. There's information loss; there's compromise that could lead to other, more serious consequences," she said.

Saltsman seemingly put these valid concerns to bed when he previously told Blaze News that EdgeRunner AI would remain completely offline.

The entrepreneur even advocated for publicly available AI models to offer an offline version that users can pay for and keep. Alternatives, he explained, "want your data, they want your prompts, they want to learn more about you."

"They want to spy on you," he added.

Saltsman recently announced a partnership with Mark Zuckerberg's Meta that will see the technology shared with military allies across the world.

"It's important for the government to partner with industry and academia and have joint-force operations in this field," he told Blaze News. "I'm thankful for Secretary of War Pete Hegseth and all he is doing to reshape the DOW and help it become more effective."

Photo by Smith Collection/Gado/Getty Images

Photographer: Laura Proctor/Bloomberg via Getty Images