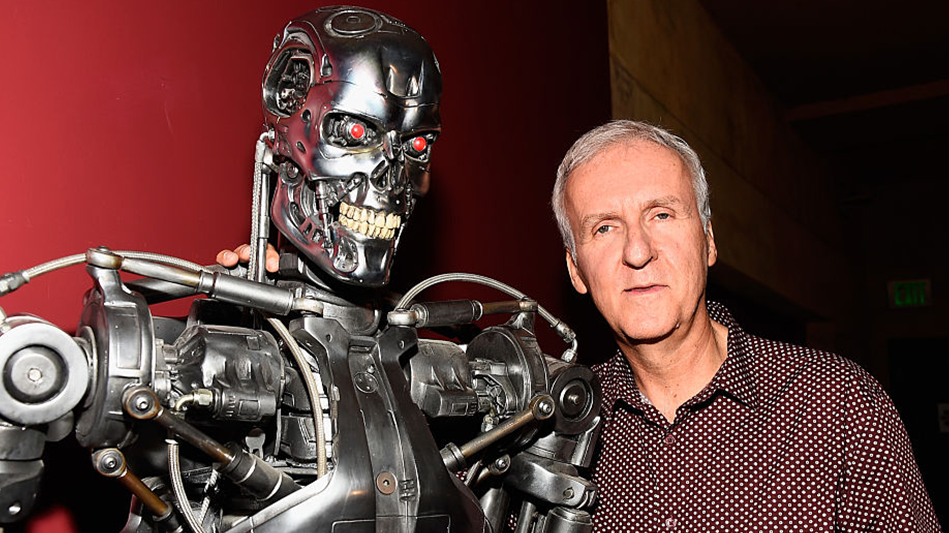

James Cameron explains how a 'Terminator-style apocalypse' could happen

Filmmaker James Cameron warned that coupling artificial intelligence with certain technologies could have a devastating effect on humanity.

Cameron, fresh off filming "Avatar: Fire and Ash," gave an interview about an upcoming project on the use of the atomic bomb in World War II.

Touching on the idea of disarming countries of their nuclear weapons, Cameron was asked how AI could spell the end of the world if it was combined with powerful weaponry.

In reference to his "Terminator" films in which AI launches nukes all over the world, Cameron said, "I do think there's still a danger of a 'Terminator'-style apocalypse where you put AI together with weapons systems, even up to the level of nuclear weapon systems, nuclear defense counterstrike, all that stuff."

Cameron theorized that with theater of war operations becoming so "rapid," decisions could be left up to "superintelligence," or a form of AI, that would end up using weapons systems with massive consequences.

"Maybe we'll be smart and keep a human in the loop," the director told Rolling Stone.

Cameron listed nuclear weapons and superintelligence in a trio of "existential threats" he thinks are facing human development. What he labeled as the third threat is likely to be more controversial than the first two.

"Climate and our overall degradation of the natural world, nuclear weapons, and superintelligence. They're all sort of manifesting and peaking at the same time," Cameron claimed. "Maybe the superintelligence is the answer. I don't know. I'm not predicting that, but it might be."

Cameron then imagined that AI might agree with him in terms of ridding the world of nuclear weapons and electromagnetic pulses, because they mess with data networks. He then compared AI dealing with humanity to keeping an 80-year-old alive by taking away his car keys, and he brainstormed whether or not AI could force humanity to go back to its natural state.

"I could imagine an AI saying, guess what's the best technology on the planet? DNA, and nature does it better than I could do it for 1,000 years from now, and so we're going to focus on getting nature back where it used to be. I could imagine, AI could write that story compellingly."

Cameron made similar remarks in 2023, when he downplayed the threat of AI unless there were specific circumstances at play.

In an interview on CTV News, Cameron said humans would remain superior to AI until it could process thoughts using as little electricity as the human brain does, as opposed to an "acre of processors pulling 10 to 20 megawatts."

The filmmaker even seemed to take the assertion that AI is a threat to humanity as a personal insult.

"When [AI systems] have that kind of mobility and flexibility and ability to project our sensory and cognitive apparatus anywhere we want to go any time we want to go, then talk to me about who's superior."

The 70-year-old told Rolling Stone that much of his imagery for his films, good or bad, comes from his dreams. This included compelling scenarios that turned into drawings and paintings, which were later used for the "Avatar" movies, as well as "horrific dreams" that became the "Terminator" series.
