Google CEO admits he doesn't 'fully understand' how his AI works after it taught itself a new language and invented fake data to advance an idea

In March, Google released Bard, an artificial intelligence tool touted as ChatGPT's rival. Just weeks into this public experiment, Bard has already defied expectations and ethical boundaries.

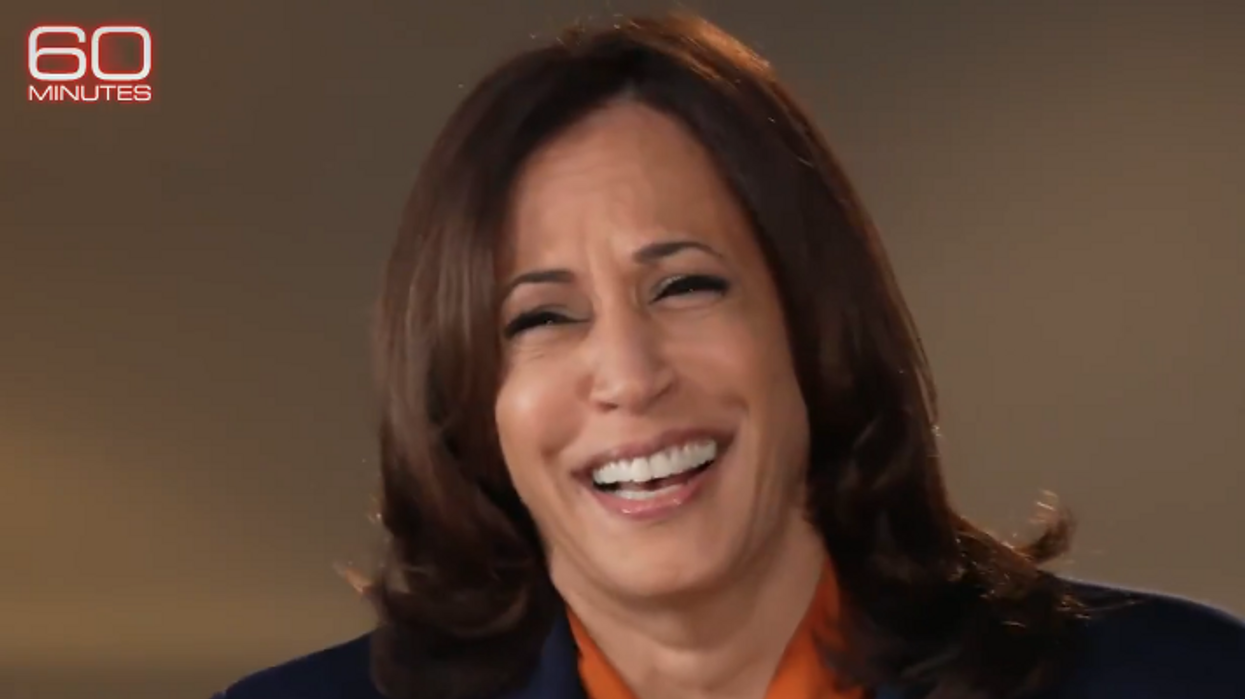

In an interview with CBS' "60 Minutes" that aired Sunday, Google CEO Sundar Pichai admitted that there is a degree of impenetrability regarding generative AI chatbots' reasoning.

"There is an aspect of this which we call ... a 'black box.' You know, you don't fully understand," said Pichai. "You can't quite tell why it said this or why it got wrong. We have some ideas, and our ability to understand this gets better over time. But that's where the state of the art is."

CBS' Scott Pelley asked, "You don't fully understand how it works and yet you've turned it loose on society?"

"Let me put it this way: I don't think we fully understand how a human mind works either," responded Pichai.

Despite citing ignorance on another subject as a rationale for blindly releasing new technology into the wild, Pichai was nevertheless willing to admit, "AI will impact everything."

Google describes Bard on its website as "a creative and helpful collaborator" that "can supercharge your imagination, boost your productivity, and help you bring your ideas to life—whether you want help planning the perfect birthday party and drafting the invitation, creating a pro & con list for a big decision, or understanding really complex topics simply."

While Pichai and other technologists have highlighted possible benefits of generative AI, Goldman Sachs noted in a March 26 report, "Generative AI could expose the equivalent of 300 million full-time jobs to automation."

In 2019, then-candidate Joe Biden told coal miners facing unemployment to "learn to code." In a twist of fate, the Goldman Sachs report indicated that coders and technologically savvy white-collar workers face replacement by Bard-like AI models at higher rates than the workers whose skills the president denigrated only a few years ago.

Legal, engineering, financial, sales, forestry, protective service, and education industries all reportedly face over 27% workforce exposure to automation.

Hundreds of millions of jobs may not be the only loss: truth may also be a casualty of the corresponding inhuman revolution.

"60 Minutes" reported that James Manyika, Google's senior vice president of technology and society, asked Bard about inflation. Within moments, the tool provided him with an essay on economics along with five recommended books, ostensibly as a means to bolster its claims. However, it soon became clear that none of the books were real. All of the titles were pure fictions.

Pelley confronted Pichai about the chatbot's apparent willingness to lie, which technologists reportedly refer to as "error with confidence" or "hallucinations."

For instance, according to Google, when prompted about how it works, Bard will oftentimes lie or "hallucinate" about how it was trained or how it functions.

"Are you getting a lot of hallucinations?" asked Pelley.

"Yes, you know, which is expected. No one in the, in the field, has yet solved the hallucination problems. All models do have this as an issue," answered Pichai.

The Google CEO appeared uncertain when pressed on whether AI models' eagerness to bend the truth to suit their ends is a solvable problem, though he noted with confidence, "We'll make progress."

"One AI program spoke in a foreign language it was never trained to know. This mysterious behavior, called emergent properties, has been happening – where AI unexpectedly teaches itself a new skill. https://t.co/v9enOVgpXT" - 60 Minutes (@60Minutes), April 16, 2023

Bard is not just a talented liar. It's also an autodidact.

Manyika indicated Bard has evidenced staggering emergent properties.

Emergent properties are attributes of a system that its constituent parts do not have on their own but that arise when those parts interact collectively or within a wider whole.

Britannica offers human memory as an example: "A memory that is stored in the human brain is an emergent property because it cannot be understood as a property of a single neuron or even many neurons considered one at a time. Rather, it is a collective property of a large number of neurons acting together."

Bard allegedly had no initial knowledge of or fluency in Bengali. However, need precipitated emergence.

"We discovered that with very few amounts of prompting in Bengali, it can now translate all of Bengali. So now, all of a sudden, we now have a research effort where we're now trying to get to a thousand languages," said Manyika.

These talented liars capable of amassing experience unprompted may soon express their competence in the world of flesh and bone — on factory floors, on soccer fields, and in other "human environments."

Raia Hadsell, vice president of research and robotics at Google's DeepMind, told "60 Minutes" that engineers helped teach their AI program how to emulate human movement in a soccer game. However, they prompted the self-learning program not to move like a human, but to learn how to score.

Accordingly, the AI, preoccupied with the ends, not the means, evolved its understanding of motion, discarding ineffective movements and optimizing its soccer moves in order to ultimately score more points.

"This is the type of research that can eventually lead to robots that can come out of the factories and work in other types of human environments. You know, think about mining, think about dangerous construction work or exploration or disaster recovery," said Hadsell.

The aforementioned Goldman Sachs report on jobs lost to automation did not appear to factor in the kind of self-learning robots Hadsell envisions marching out into the world.

Prior to the conquest of human environments by machines, there are plenty of threats already presented by these new technologies that may first need to be addressed.

Newsweek prompted Bard's competitor ChatGPT about risks that AI technology could pose, and it answered: "As AI becomes more advanced, it could be used to manipulate public opinion, spread propaganda, or launch cyber-attacks on critical infrastructure. AI-powered social media bots can be used to amplify certain messages or opinions, creating the illusion of popular support or opposition to a particular issue. AI algorithms can also be used to create and spread fake news or disinformation, which can influence public opinion and sway elections."
