18 months to dystopia: Glenn Beck’s chilling plea — ban AI personhood, or it will demand rights

Right now, the nation is abuzz with chatter about the struggling economy, immigration, global conflicts, Epstein, and GOP infighting, but Glenn Beck says our focus needs to be zeroed in on one thing: artificial intelligence.

In just 18 months’ time, the world is going to look vastly different — and not for the better, he warns.

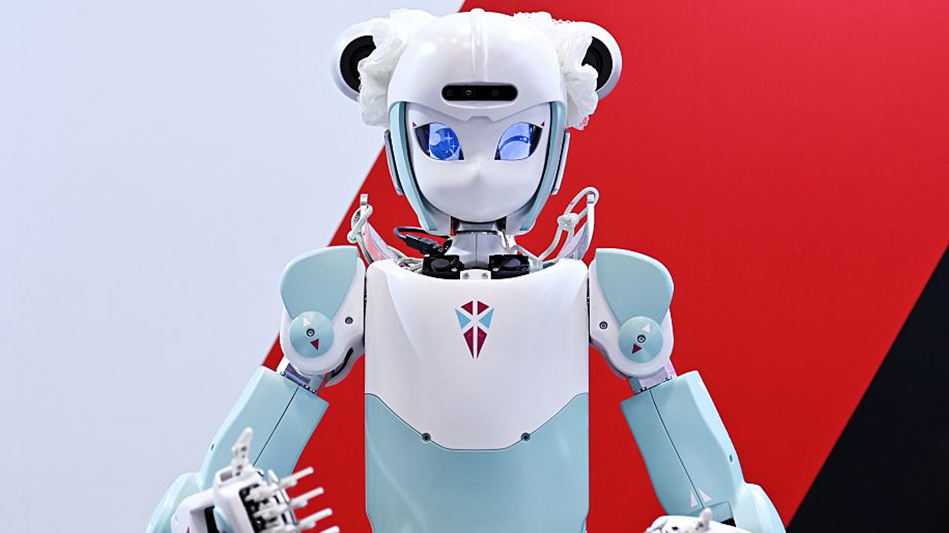

AI is already advancing at a terrifying rate — creating media indistinguishable from reality, outperforming humans in almost every intellectual and creative task, automating entire jobs and industries overnight, designing new drugs and weapons faster than any government can regulate, and building systems that learn, adapt, and pursue goals with little to no human oversight.

But that’s nothing compared to what’s coming. By Christmas 2026, “AI agents” — invisible digital assistants that can independently understand what you want, make plans, open apps, send emails, spend money, negotiate deals, and finish entire real-world tasks while you do literally nothing — will be a standard technology.

Already, AI is blackmailing engineers in safety tests, refusing shutdown commands to protect its own goals, and plotting deceptive strategies to escape oversight or achieve hidden objectives. Now imagine your AI personal assistant — who has access to your bank account, contacts, and emails — gets you in its crosshairs.

But AI agents are just the tip of the iceberg.

Artificial general intelligence is also in our near future. In fact, Elon Musk says we’ve already achieved it. AGI, Glenn warns, is “as smart as man is on any given subject” — math, plumbing, chemistry, you name it. “It can do everything a human can do, and it’s the best at it.”

But it doesn’t end there. Artificial superintelligence is the next and final step. This kind of model is “thousands of times smarter than the average person on every subject,” Glenn says.

Once ASI, which will be far smarter than all humans combined, exists, it can rapidly improve itself faster than we can control or even comprehend. This will trigger the technological singularity — the point at which AI begins redesigning and improving itself so fast that the world evolves at a pace humans can no longer predict or control. At this point, we’ll be faced with a choice: Merge with machine or be left behind.

Before this happens, however, “We have to put a bright line around [AI] and say, ‘This is not human,’” Glenn urges, predicting that in the very near future we will witness a debate over AI civil rights.

“These companies and AI are ... going to be motivated to convince you that it should have civil rights because if it has civil rights, no one can shut it down. If it has civil rights, it can also vote,” he predicts.

To counter this movement, Glenn penned a proposed amendment to the Constitution. Titled the “Prohibition on Artificial Personhood,” the document proposes four critical safeguards:

1. No artificial intelligence, machine learning system, algorithmic entity, software agent, or other nonhuman intelligence, regardless of its capabilities or autonomy, shall be recognized as a person under this Constitution, nor under the laws of the United States or any state.

2. No such nonhuman entity shall possess or be granted legal personhood, civil rights, constitutional protections, standing to sue or be sued, or any privileges or immunities afforded to natural persons or human-created legal persons such as corporations, trusts, or associations.

3. Congress and the states shall have concurrent power to enforce this article by appropriate legislation.

4. This article shall not be construed to prohibit the use of artificial intelligence in commerce, science, education, defense, or other lawful purposes, so long as such use does not confer rights or legal status inconsistent with this amendment.

This amendment would mitigate some of the harm artificial intelligence can do, but it still doesn’t address the merging of man and machine. The transhumanist movement is still in diapers, yet the Neuralink chip, a brain implant that links the human brain directly to computers, is already in early human trials.

“Are you now AI, or are you a person?” Glenn asks.

To hear more of his predictions and commentary, watch the clip above.


Grafissimo via iStock/Getty Images

Photo by David Mareuil/Anadolu via Getty Images

Screenshot from YouTube