Bill Gates demands a new religion for humanity

It’s a mask-off moment. On the “Possible” podcast, co-hosted by LinkedIn co-founder Reid Hoffman, Bill Gates insisted humanity would need a new religion or philosophy to cope with the reality of AI and the technological conquest of the world.

In his final comment on the episode — which Hoffman calls a “tour de force” — Gates reflects at length on the spiritual situation he believes unbridled tech is coercing us into.

“The potential positive path is so good that it will force us to rethink how should we use our time,” he says. “You know, you can almost call it a new religion or a new philosophy of, okay, how do we stay connected with each other, not addicted to these things that’ll make video games look like nothing in terms of the attractiveness of spending time on them.”

On the surface, Gates seems to be advancing a claim plenty of people can agree with — the idea that the coming virtual world will be so tempting to disappear into that only a deep source of spiritual authority will be enough to remind us that we’re still best off sharing life together as the human beings we are.

But it’s not just the virtual world he’s talking about. “So it’s fascinating that we will, the issues of, you know, disease and enough food or climate, if things go well, those will largely become solved problems. And, you know, so the next generation does get to say, ‘Okay, given that some things that were massively in shortage are now not, how do, how do we take advantage of that?’”

Here’s where things get tricky. You might have wondered already why Gates, if he feels so sure that we need cosmic protection against becoming cyber zombies, doesn’t immediately reach for a religion that already exists and flourishes — especially Christianity, which still dominates American faith identification and significant segments of public life.

Well, his assumption is that tech will render obsolete at least some of the words of Christ, such as “you have the poor with you always” (always there, that is, for you to help and serve). Now one might say that even if physical sickness and hunger become “solved problems,” many might still (or especially) suffer from mental and spiritual illness and thirst. But even that line of reasoning is not what interests Gates. He’s more concerned about sports.

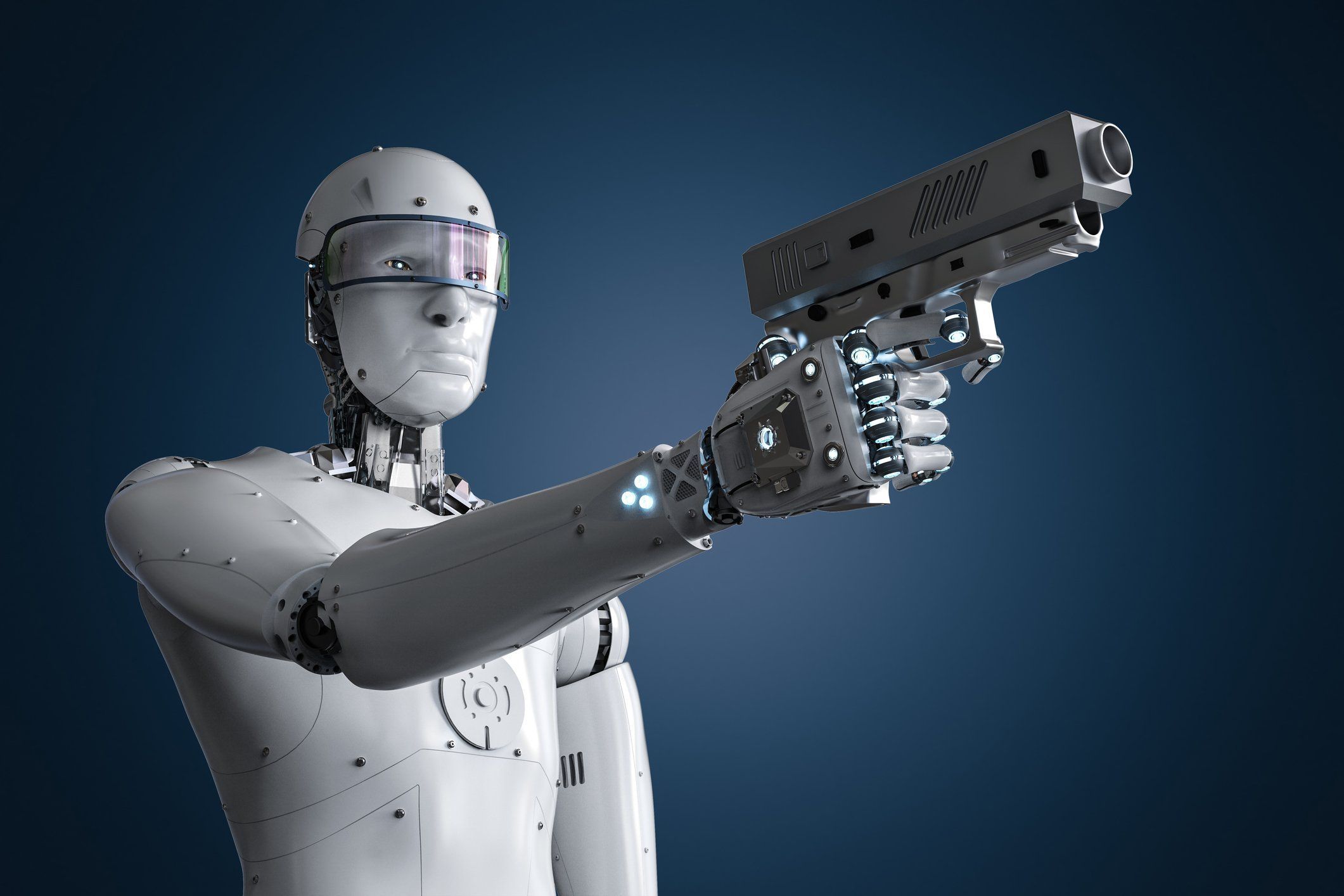

Yes, sports. “You know, do we ban AI being used in certain endeavors so that humans get some … you know, you know, like you don’t want robots playing baseball, probably,” he stammers. “Because they’re, they’ll be too good. So we’ll, we’ll keep them off the field. Okay. How broadly would you go with that?”

Maybe so “broadly” that we’d want to focus on ensuring people aren’t led into the darkness of worshipping their machines or automating their religion? Perhaps that’s something we need to do already, not after the machines and their self-appointed masters — no matter how well intentioned — drag us to a place where our given humanity is almost unrecognizable.

“We are so used to this shortage world that, you know, I, I, I hope I get to see how we start to rethink the, these deep meaning questions,” Gates concludes. But for all his ostensible futurism, he blinds himself to the present, where some tech-savvy Christians have spent years making plain that the tools we need to ensure we don’t wipe ourselves out with awesome wonders are already at hand … because they are the same yesterday, today, and forever.