Goodbye, anons? Radical transparency is about to upend the internet

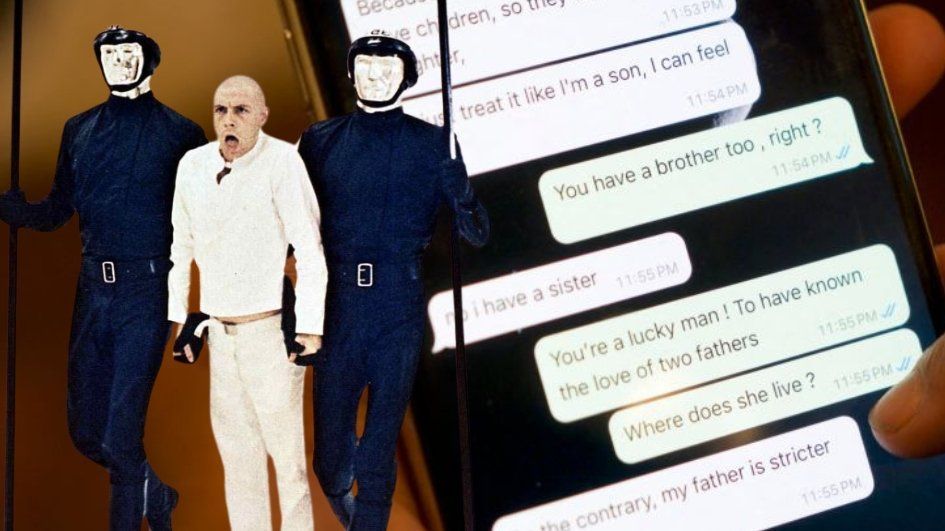

In June, Texas Patriot, a prominent anonymous account supportive of President Donald Trump, announced during the height of tensions with Iran:

F**k it. If Trump takes us to war, I’m done with him and his administration.

I voted for:

NO WARS

No taxes

Cheap gas

Cheap groceries

MAHA.

What of these things has actually happened?

I’m pissed.

This message from a popular pro-Trump account seemed significant. Was Trump’s populist base turning on him?

But shortly thereafter, Right Angle News, another popular anonymous account, asserted that Texas Patriot was actually based in Pakistan. Yet another popular anon account contested this, saying that Texas Patriot is really an American originally from Texas who now lives in Georgia. Notably, most other major accounts weighing in on the controversy, from Proud Elephant to Evil Texan, are themselves anonymous, adding further to the hall of mirrors.

Either way, Texas Patriot deleted its own account shortly thereafter, perhaps suggesting that he or she had something to hide — or at least didn’t want the scrutiny.

The question of whether Texas Patriot is, in fact, a patriot from Texas or a bad actor in Islamabad is ultimately beside the point. As Newsweek wrote of the incident:

Social media has proved useful for galvanizing the MAGA movement, with popular accounts often reacting to political developments from Trump’s feud with X owner Elon Musk to Trump’s policy agenda. If it emerged that an account alleged to be American was actually based in another country, it would impact users’ trust.

And such trust is rapidly eroding, which will accelerate as ever more sophisticated fake accounts and bot farms are exposed.

The incident was just one of many in which major social media accounts were discovered — or at least suggested — to be run by someone far different from who they were purported to be. And it previews a shift that is just now beginning, which will fundamentally change how we interact with social media content.

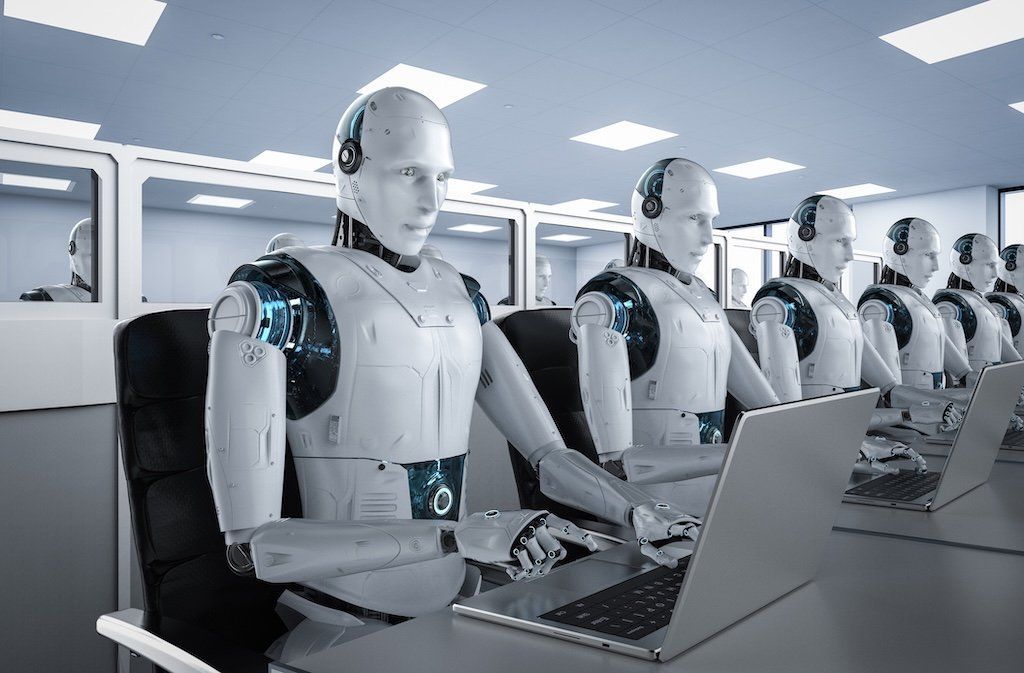

Bots indistinguishable from humans

When it comes to who will rule social media, the age of the anon is ending. The age of radical transparency is beginning — and yet, if designed well, radical transparency can still include a substantial and valuable space for a large degree of online anonymity.

Several reasons explain the shift. Increasingly sophisticated artificial intelligence models and bots generate outputs that, in many cases, are already almost indistinguishable from those of humans. For most users, they will soon become fully indistinguishable (multiple studies have shown that most people have a poor ability to tell the difference between the two). And almost certainly, bots guided with even a minimum of human interaction will become indistinguishable from actual humans.

Many of my best friends have had anon accounts. A few are still prominent anons. It’s also noteworthy that almost every prominent ex-anon I know personally, whether doxxed or self-outed, dramatically improved their profile and professional opportunities once they were no longer anonymous.

I am not anti-anon, however. I understand why some people, especially those expressing opinions well outside of the mainstream, need to be anonymous. I also acknowledge that anonymity has been a crucial part of the American political tradition since the revolutionary era. An internet that banned anons would be an internet that is much poorer. This is why the biggest current anon accounts will be grandfathered into the coming system of radical transparency, as they have actual operators who are known to enough people that they are recognized as genuine.

I know several big anon accounts like this. I don’t know who is running them, but I have multiple offline friends I trust who do know the account holders and vouch for them. Accounts of this kind, with credible, real-world validation, will continue to have influence. But increasingly, new big anon accounts will be ignored, even if they amass a large number of followers (many of whom are fake).

As these ersatz accounts become increasingly sophisticated every day, engaging with the truly real becomes ever more important. Fake videos and photos proliferating on social media merely add to the potential for deception.

Age of radical transparency

Even accounts run by real people will not be immune to the age of radical transparency. Some are partially or wholly automated — a way for a “content creator” to maintain a cheap 24-hour revenue stream. In the future, if you want to have influence, mechanisms will be in place to prove not only that it is you who are posting but that you are posting content that is authentic, with a proven real-world point of origin. Some have even suggested using the blockchain as a method of validation.

There should be a simple way of blocking the worst AI slop accounts, foreign bad actors who post highly packaged clickbait, or those who shamelessly steal content made by others. Most Americans would probably prefer not to engage with unverified foreign accounts when discussing U.S. politics. Certainly, I would be willing to pay for a feed that only showed me real, verified accounts from America, along with a limited list of paid, verified, and non-anonymous accounts from other parts of the world.

I am interested in having discussions with real people about real content and the real opinions they have. I want accounts mercilessly downrated if they produce inauthentic content presented as real. I want accounts downrated that regularly retweet unverified slop. If X, or any other online platform, can’t consistently provide that, I’ll look elsewhere — and so will many others.

Anonymity breeds toxicity

My desire for authenticity is not a left-wing attempt to police “disinformation” — that is, whatever the left doesn’t want said. It’s far more serious. It’s not about getting “true” facts but a feed that is filled with actual people producing their own content representing their own views — with clear links to the sources for their claims.

Anonymity has, naturally, always been accompanied by a slew of problems: It can lead to echo chambers or aggressive exchanges, as users feel less pressure to engage rationally.

The lack of personal stakes can escalate conflict, which is amplified by AI. Modern AI can generate thousands of unique, human-like posts in seconds, overwhelming feeds with propaganda or fake news. The increasing influence of state actors in this fake news ecosystem makes it even riskier.

Anonymity also emboldens individuals to act without fear of repercussions, which often has downsides. The online disinhibition effect, a psychological phenomenon first described by psychologist John Suler in 2004, suggests that anonymity reduces social inhibitions, leading to behaviors individuals might avoid in face-to-face settings.

Everyone has met the toxic anon online personality who turns out to be quite meek and agreeable in person. One friend of mine who had an edgy online persona eventually closed her anon account (with tens of thousands of followers) and recreated her online presence from scratch as a “face” account. Her tweets are no longer as fun or spicy as they had been, but her persona is real — and presents who she really is. And she eventually landed a great public-facing job, partly based on the quality of her tweets.

Dwindling era of anon accounts

Anons could play a leading role in the old social media world where bots were mostly obvious, and meaningful provocations were, in large part, created by real people through anonymous accounts. In our current world, however, where plausible fake engagement can be created on an almost limitless scale, true anons will lose a great deal of their power. They will be replaced as top influencers by those who are willing to be radically transparent.

Truly transparent identities should include verifiable information, such as email addresses, phone numbers, or government-issued IDs for account creation. While such information does not need to be publicly shared, it should be given to the social media company connected to the account.

Raising the barrier for AI-driven impersonation, while not foolproof, deters malicious actors, who must invest significant resources to create credible fake identities.

For anons unwilling to trust their private information to one of the major online platforms, third-party identity verifiers dedicated to protecting user privacy could carefully validate their identities while keeping them anonymous from social media companies. Such third-party brokers would themselves be held in check by the accuracy of their verification procedures, with their prestige depending on it. This method would still allow for a high degree of public anonymity, bolstered by a backend that guarantees authenticity.
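One way to picture the third-party arrangement is the minimal Python sketch below. It is an illustration under stated assumptions, not a description of any existing service: the function names, the handle format, and the use of a keyed hash (HMAC) are all hypothetical, and a real verifier would more likely rely on public-key signatures. The point is only that the verifier checks the real ID once, then vouches for the handle alone, so the platform learns that a real person stands behind the account without learning who.

```python
import hashlib
import hmac
import secrets
from typing import Optional

# Hypothetical secret held only by the third-party verifier.
# Assumption: a production system would use public-key signatures instead.
VERIFIER_KEY = secrets.token_bytes(32)


def _sign(handle: str) -> str:
    """Keyed hash over the public handle only; no identity data is included."""
    return hmac.new(VERIFIER_KEY, handle.encode("utf-8"), hashlib.sha256).hexdigest()


def issue_attestation(handle: str, id_check_passed: bool) -> Optional[str]:
    """Verifier side: after checking a real ID privately, vouch for the handle."""
    if not id_check_passed:
        return None
    return _sign(handle)


def verifier_confirms(handle: str, attestation: str) -> bool:
    """Verifier side: answer a platform's yes/no query about a handle."""
    return hmac.compare_digest(_sign(handle), attestation)


# Hypothetical usage: the anon presents the attestation to the platform,
# which asks the verifier whether it is genuine for that handle.
token = issue_attestation("@some_anon", id_check_passed=True)
print(token is not None and verifier_confirms("@some_anon", token))
```

In this sketch the attestation is bound to a single handle, so it cannot be reused to vouch for a different account, and the platform never receives the name, phone number, or ID that the verifier examined.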

A new internet age

In the future, pure online anonymity will not be banned, nor should it be. But in the coming age of radical transparency, a truly anonymous account, one whose owner’s real-world identity is neither known within its own trusted circles nor verified by a reliable third party, will have little to no value.

The next internet age will value not just what you say, but more importantly, that others know you are the one who is saying it.

Editor’s note: A version of this article appeared originally in The American Mind.
