Your kids' iPhones may be the most dangerous things they own

What’s an acceptable level of online child sexual abuse, blackmail, and sextortion? How many teen suicides must happen before someone acts? Most parents would say the answer is obvious: zero.

Apple doesn’t seem to agree. Despite serving as the constant digital companion for millions of American kids, the company has done nothing to rein in the iMessage app — a tool that now functions as an unregulated playground for child predators. Apple has shrugged off the problem while iMessage becomes the wild west of child exploitation: unchecked, unreported, and deadly.

It’s long past time for Apple to confront the truth: Its inaction empowers predators. And that makes the company complicit and accountable.

You wouldn’t leave a toddler alone by the pool. You wouldn’t hand your 9-year-old the keys to a pickup. And when you drive that truck, you don’t let your kid ride on the hood. But every day, parents hand their children a device that could be just as dangerous: the iPhone.

That device follows them everywhere — to school, to bed, into the darkest corners of the internet. The threat doesn’t just come from YouTube or TikTok. It’s baked into iMessage itself — the default communication tool on every iPhone, the one parents use to text their kids.

Unlike social media platforms or games, iMessage gives parents almost no tools to limit its use or increase safety. No meaningful restrictions. No guardrails. No accountability.

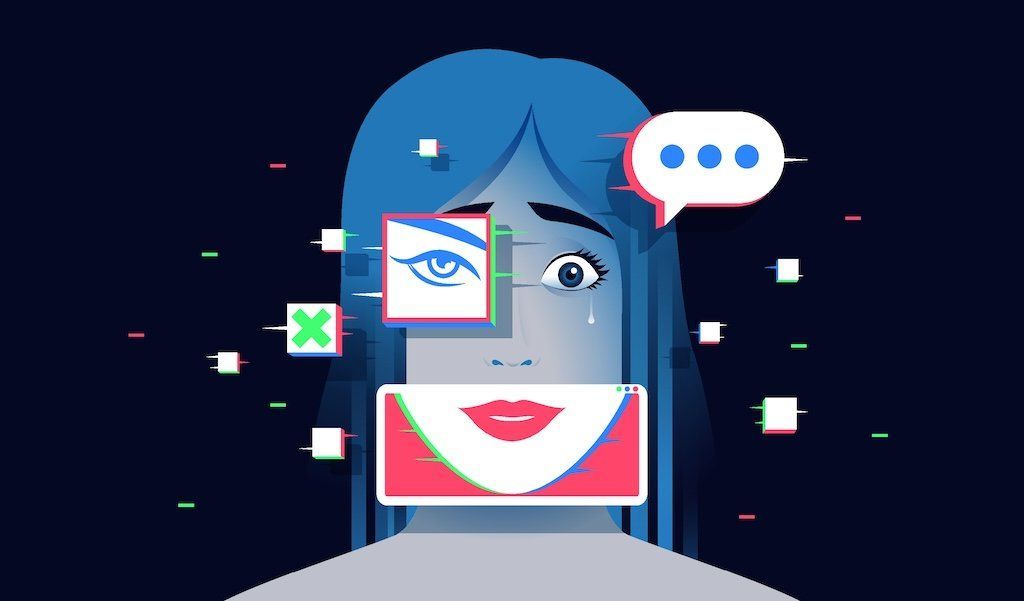

Criminals understand this — and they take full advantage. They generate fake nude images of boys and send them via iMessage. Then, they threaten to release the images to the victims’ classmates and followers unless they pay up. It’s extortion. It’s emotional torture. And it often ends in tragedy.

This isn’t rare. It’s growing. Online child sexual abuse and predatory contact with children are spreading fast, and Apple refuses to act.

The statistics are outrageous:

- More than one in five children ages 9 to 12 have reported some type of sexual interaction online with someone they believed to be an adult;

- More than half (54%) of 18- to 20-year-olds experienced “online sexual harms during childhood”;

- There has been an 80% increase in online grooming crimes in just the past four years;

- In 2024, the public reported more than 5,000 sextortion cases, and 34% involved iMessage;

- In just the first three months of this year, 38% of the more than 2,000 reported cases included sextortion attempts via iMessage.

Why do predators prefer iMessage over apps like WhatsApp or Snapchat? According to law enforcement and online safety experts, iMessage offers “an appealing venue” for grooming — a place where predators can build trust with your child. They identify victims on public platforms, then move the conversation to iMessage, where no safety guardrails exist.

And children trust it. That familiar blue bubble? Apple teaches them it means the message came from a “trusted source.” Not just another text — another iPhone.

Apple claims to offer a “communication safety” feature that blurs nude images sent to kids through iMessage. But here’s the catch: The alert lets the child view the image anyway. That’s not a safety feature. That’s a fig leaf.

Apple knows exactly what iMessage enables: a criminal playground for sextortion, child sexual abuse, and worse. But Apple doesn’t act. Why? Because it doesn’t have to. The company sees no urgent economic risk, because teens keep buying its phones. Today, 88% of American teens own iPhones. This fall, 25% are expected to upgrade to iPhone 17, up from 22% last year.

The numbers tell the rest of the story.

In 2024, the National Center for Missing and Exploited Children identified more than 20 million cases of suspected online child sexual exploitation — much of it sextortion. Instagram reported 3.3 million. WhatsApp logged more than 1.8 million. Snapchat topped 1.1 million.

Apple reported 250.

No level of child sexual exploitation is acceptable. Not one instance. Content providers and app developers across the industry have taken steps to protect children. Apple, by contrast, has shrugged. Its silence is willful. Its inaction is a choice.

It’s long past time for Apple to confront the truth: Its inaction empowers predators. And that makes the company complicit and accountable — economically, legally, and morally.
