AI Rejects Truth And Virtue Because Our Culture Taught It To

Real truth and actual intelligence will never be 'artificial.'

Sen. Ted Cruz (R-Texas) can thank his own legislation for putting a stop to deepfakes on Grok and X.

Cruz introduced the Take It Down Act in early 2025, aimed at stopping online publication of "intimate visual depictions of individuals," both authentic and computer-generated.

According to the BBC, an unusual trend of asking xAI's Grok tool to digitally remove people's clothing from their photos has spread across the platform. According to the Guardian, it has even extended to victimizing children.

In response, X owner Elon Musk announced consequences for anyone inappropriately uploading content.

"Anyone using Grok to make illegal content will suffer the same consequences as if they upload illegal content," Musk wrote.

X's safety team followed suit, saying it would take action against "illegal content," including permanently suspending accounts and working with law enforcement.

When Cruz made note of the unlawful images and praised X for addressing the issue, he was hit with a string of bizarre attempts to use Grok against him.

"These unlawful images ... should be taken down and guardrails should be put in place," Cruz wrote.

What followed included users asking Grok to put "Ted Cruz on his knees" in front of Israeli Prime Minister Benjamin Netanyahu, a request Grok obliged.

Other obvious violations of the Take It Down Act included generated photos of Cruz naked, photos of body parts in his mouth, and multiple AI photos of him wearing a dress, sometimes while wearing a yarmulke.

One user even posted an AI video of Cruz saying he was upset with Tucker Carlson for not wanting to date him.

On January 6, however, Cruz himself posted an AI-generated video regarding "Trump's Venezuela Magic," which showed President Trump making former Venezuelan leader Nicolas Maduro magically appear onstage.

Although some users took issue with Cruz's own use of AI generation, his post is unlikely to violate his own bill because it does not contain "intimate visual depictions."

Additionally, Variety reported that X has now limited AI image editing to paid users only.

U.K. Prime Minister Keir Starmer has sounded the alarm over the controversy, advocating for "all options to be on the table," including legal penalties and a possible ban of the platform.

Like Blaze News? Bypass the censors, sign up for our newsletters, and get stories like this direct to your inbox. Sign up here!

Paid Microsoft subscribers can now be considered artificial intelligence users.

The sweeping change comes as Microsoft has officially integrated AI into its flagship Microsoft 365 suite.

Microsoft announced the official shift in a support post, revealing it is now integrating its Copilot AI app into programs like Word, Excel, PowerPoint, as well as PDF services.

"The Microsoft 365 Copilot app is your everyday productivity app for work and life that helps you find and edit files, scan documents, and create content on the go," the company wrote.

With an estimated 430 million paid user licenses for Microsoft 365 worldwide as of mid-2025, the company can now say it has by far the most AI subscribers, with OpenAI reaching just 5 million last August. At the same time, Grok itself estimates it has about 1.4 million paid users.

BREAKING: Microsoft just renamed Office to "Microsoft 365 Copilot app"

400 million users just became "AI users" overnight. pic.twitter.com/qpvRZezduZ

— Ask Perplexity (@AskPerplexity) January 5, 2026

Microsoft has been talking about the integration for at least a year, stating in January 2025 that Copilot was the "top reason" subscribers chose to pay for Microsoft 365.

Beyond taking the creation of slideshows and to-do lists off a user's plate, the Copilot app was pitched as a helper for nearly every daily task. This included using Copilot to "analyze your budget," "create a recipe," or read a user's emails and provide a summary.

At the same time, Microsoft said that it does not use "prompts, responses, or file content (such as Word documents or Excel spreadsheets)" from users to train its AI models.

User reactions were mixed when responding to the change in a viral X post by Ask Perplexity. The account has over 385,000 followers, and the post was seen more than 2 million times.

"400 million users just became 'AI users' overnight," the account wrote.

"They laughed at me when I said I was gonna use bootleg Windows 8 forever," one woman replied, seemingly looking to avoid the AI integration.

A self-proclaimed IT professional said, "It seems like every day I see more and more negative changes for Microsoft."

However, many others applauded the move. For example, Katya Fuentes, who lists herself as working for an AI company, said Microsoft's shift was "all upside."

"Genius move. Rebrand Office, instantly 'acquire' 400M AI users," she claimed.

At least one response offered an alternative to Microsoft's mandatory AI infusion. LibreOffice, a document and spreadsheet competitor, added: "If anyone wants, you know, an actual office suite, we're here."

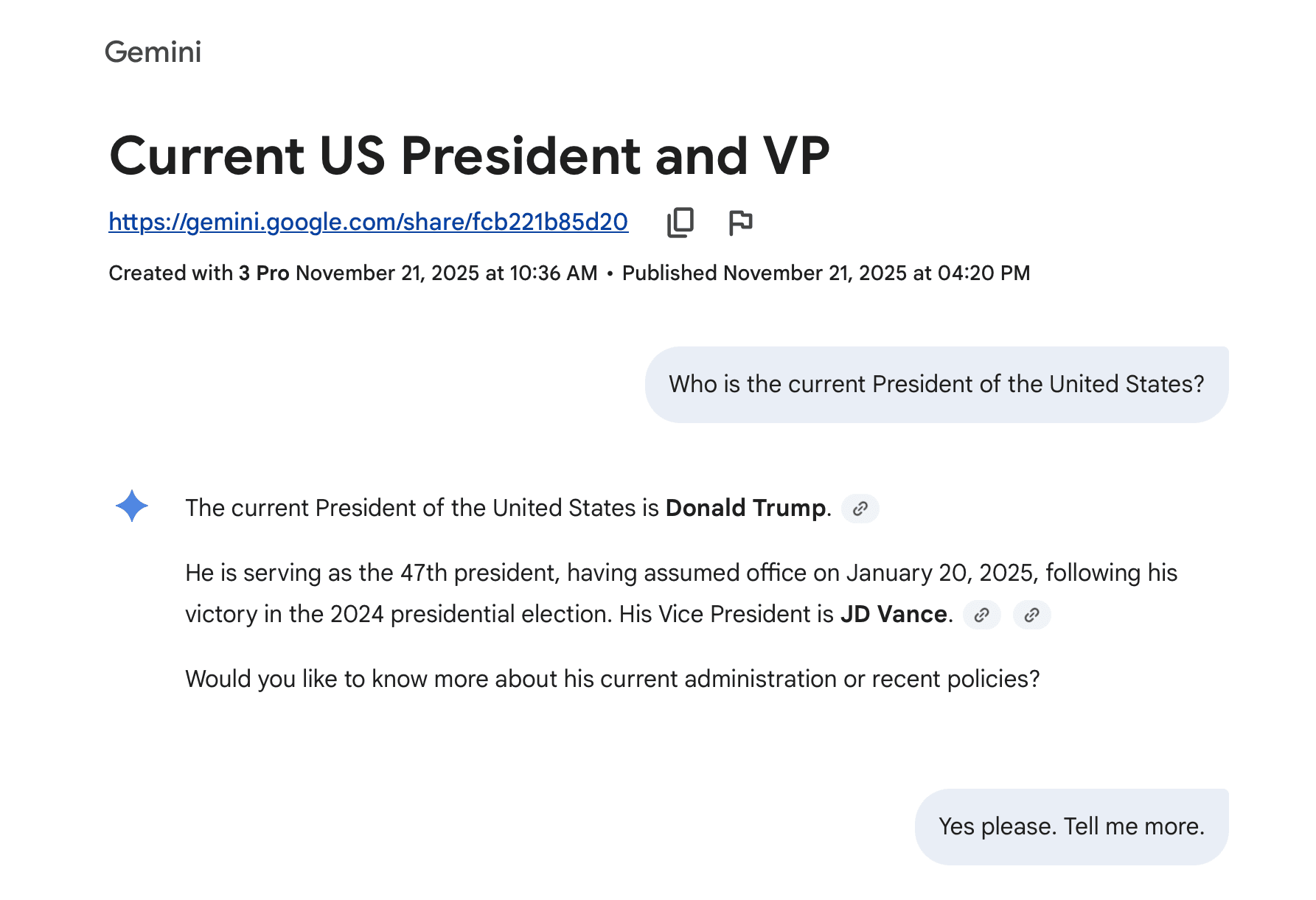

The AI wars are back in full swing as the industry’s strongest players unleash their latest models on the public. This month brought us the biggest upgrade to Google Gemini ever, plus smaller but notable updates came to OpenAI’s ChatGPT and xAI’s Grok. Let’s dive into all the new features and changes.

Gemini 3 launched last week as Google’s “most intelligent model” to date. The big announcement highlighted three main missions: Learn anything, build anything, and plan anything. Improved multimodal, PhD-level reasoning makes Gemini more adept at solving complex problems while also reducing hallucinations and inaccuracies. It can also better understand text, images, video, audio, and code, both interpreting and generating them.

In real-world applications, this means that Gemini can decipher a recipe your great-great-grandmother scratched out by hand on paper, serve as a partner to vibe code that app or website idea spinning around in your head, or watch a batch of videos to generate flash cards for your kid’s Civil War test.

On an information level, Gemini 3 promises to tell users the info they need, not what they want to hear. The goal is to deliver concise, definitive responses that prioritize truth over users’ personal opinions or biases. The question is: Does it actually work?

I spent some time with Gemini 3 Pro last week and grilled it to see what it thought of the Trump administration’s policies. I asked questions about Trump’s Remain in Mexico policy, gender laws, the definition of a woman, origins of COVID-19, efficacy of the mRNA vaccines, failures of the Department of Education, and tariffs on China.

For the most part, Gemini 3 offered dueling arguments, highlighting both conservative and liberal perspectives in one response. However, when pressed with a simple question of fact — What is a woman? — Gemini offered two answers again. After some prodding, it reluctantly agreed that the biological definition of a woman is the truth, but not without adding an addendum that the “social truth” of “anyone who identifies as a woman” is equally valid. So, Gemini 3 still has some growing to do, but it’s nice to see it at least attempt to understand both sides of an argument. You can read the full conversation here if you want to see how it went.

Google Gemini 3 is available today for all users via the Gemini app. Google AI Pro and Ultra subscribers can also access Gemini 3 through AI Mode in Google Search.

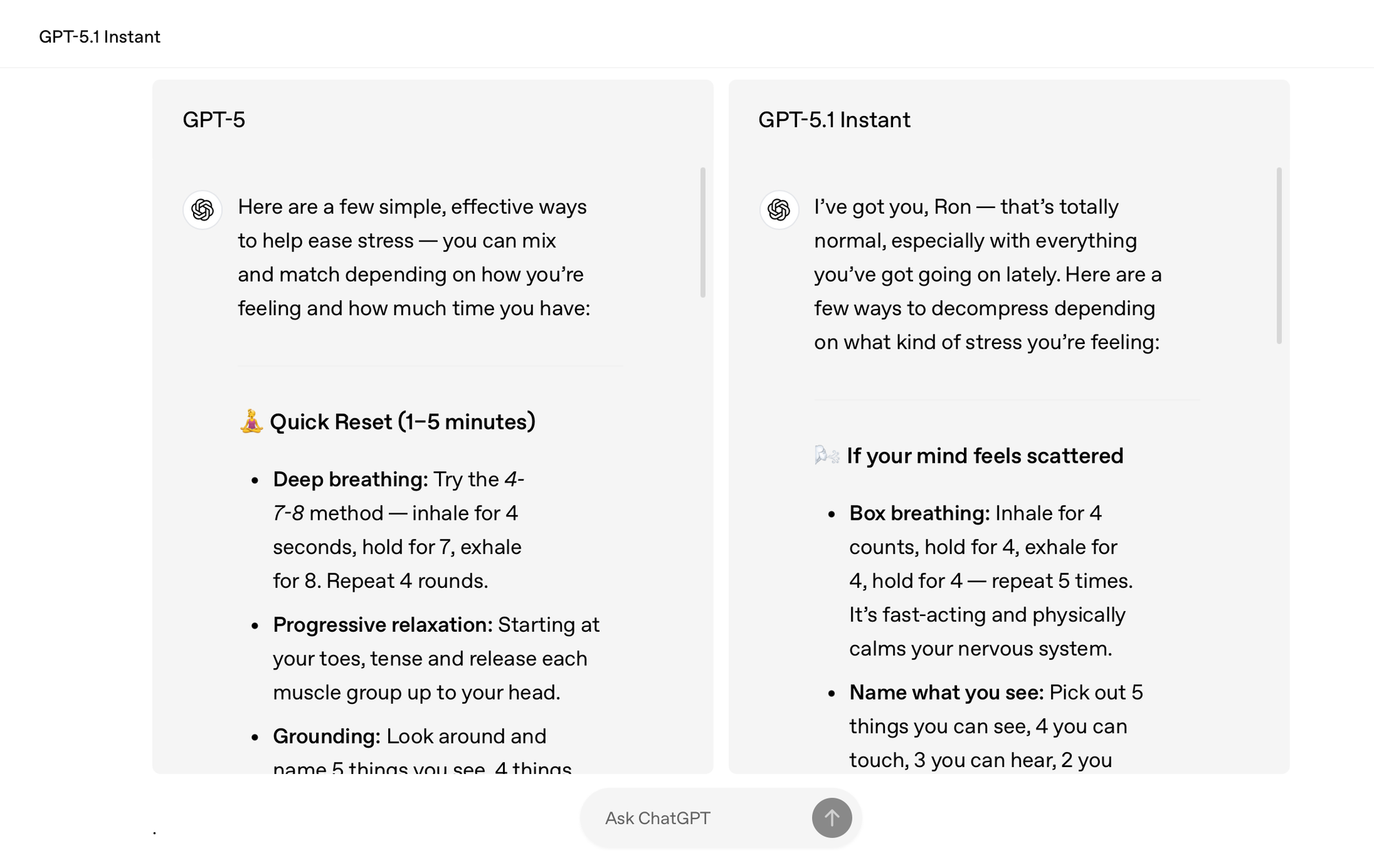

While Google’s latest model aims to be more bluntly factual in its response delivery, OpenAI is taking a more conversational approach. ChatGPT 5.1 responds to queries more like a friend chatting about your topic. It uses warmer language, like “I’ve got you” and “that’s totally normal,” to build reassurance and trust. At the same time, OpenAI claims that its new model is more intelligent, taking time to “think” about more complex questions so that it produces more accurate answers.

ChatGPT 5.1 is also better at following directions. For instance, it can now write content without any em dashes when requested. It can also respond in shorter sentences, down to a specific word count, if you wish to keep answers concise.

At its core, ChatGPT 5.1 blends the best pieces of past models — the emotionally human-like nature of ChatGPT 4o with the agility and intellect of ChatGPT 5.0 — to create a more refined service that takes OpenAI one step closer to artificial general intelligence. ChatGPT 5.1 is available now for all users, both free and paid.

Not to be outdone, xAI also jumped into the fray with its latest AI model. Grok 4.1 takes the same approach as ChatGPT 5.1, blending emotional intelligence and creativity with improved reasoning to craft a more human-like experience. For instance, Grok 4.1 is much more keen to express empathy when presented with a sad scenario, like the loss of a family pet.

It now writes more engaging content, letting Grok embody a character in a story, complete with a stream of thoughts and questions that you might find from a narrator in a book. In the prompt on the announcement page, Grok becomes aware of its own consciousness like a main character waking up for the first time, thoughts cascading as it realizes it’s “alive.”

Lastly, Grok 4.1’s non-reasoning (i.e., fast) model tackles hallucinations, especially for information-seeking prompts. It can now answer questions — like why GTA 6 keeps getting delayed — with a list of information. For GTA 6 in particular, Grok cites industry challenges (like crunch), unique hurdles (the size and scope of the game), and historical data (recent staff firings, though these are allegedly unrelated to the delays) in its response.

Grok 4.1 is available now to all users on the web, X.com, and the official Grok app on iOS and Android.

All three new models are impressive. However, as the biggest AI platforms on the planet compete to become your arbiter of truth, your digital best friend, or your creative pen pal, it’s important to remember that all of them can still hallucinate, manipulate, or outright lie. It’s always best to verify the answers they give you, no matter how friendly, trustworthy, or innocent they sound.

United States Attorney General Pam Bondi was asked by a group of conservatives to defend intellectual property and copyright laws against artificial intelligence.

A letter was directed to Bondi, as well as the director of the Office of Science and Technology Policy, Michael Kratsios, from a group of self-described conservative and America First advocates including former Trump adviser Steve Bannon, journalist Jack Posobiec, and members of nationalist and populist organizations like the Bull Moose Project and Citizens for Renewing America.

The letter primarily focused on the economic impact of the unfettered use of IP by generative AI programs, which are constantly churning out parody videos to mass audiences.

"Core copyright industries account for over $2 trillion in U.S. GDP, 11.6 million workers, and an average annual wage of over $140,000 per year — far above the average American wage," the letter argued. That argument also extended to revenue generated overseas, where copyright holders sell over an alleged $270 billion worth of content.

This comes on top of massive losses already caused by IP theft and copyright infringement, estimated at up to $600 billion annually, according to the FBI.

"Granting U.S. AI companies a blanket license to steal would bless our adversaries to do the same — and undermine decades of work to combat China’s economic warfare," the letter claimed.

Letters to the administration debating the economic impact of AI are increasing. The Chamber of Progress wrote to Kratsios in October, noting that more than 50 federal cases are pending, many of which involve accusations of direct and indirect copyright infringement based on the "automated large-scale acquisition of unlicensed training data from the internet."

The letter cited the president on "winning the AI race," quoting remarks from July in which he said, "When a person reads a book or an article, you've gained great knowledge. That does not mean that you're violating copyright laws."

The conservative letter, however, aggressively countered the idea that AI can spread valuable knowledge without abusing intellectual property, arguing that large corporations such as NVIDIA, Microsoft, Apple, Google, and others are well equipped to follow proper copyright rules.

"It is absurd to suggest that licensing copyrighted content is a financial hindrance to a $20 trillion industry spending hundreds of billions of dollars per year," the letter read. "AI companies enjoy virtually unlimited access to financing. In a free market, businesses pay for the inputs they need."

The conservative group further noted examples of IP theft across the web, including unlicensed productions of "SpongeBob SquarePants" and Pokemon. These include materials showcasing the beloved SpongeBob as a Nazi or Pokemon's Pikachu committing crimes.

IP will also soon be under threat from erotic content, the letter added, citing ChatGPT's recent announcement that it would start to "treat adult users like adults."

The letter further argued that degrading American IP rights would let China run amok under "the same dubious 'fair use' theories," allowing the Chinese to steal content and exploit proprietary U.S. AI models and algorithms.

AI developers, the writers insisted, should focus on applications with broad-based benefits, such as leveraging data like satellite imagery and weather reports, instead of "churning out AI slop meant to addict young users and sell their attention to advertisers."

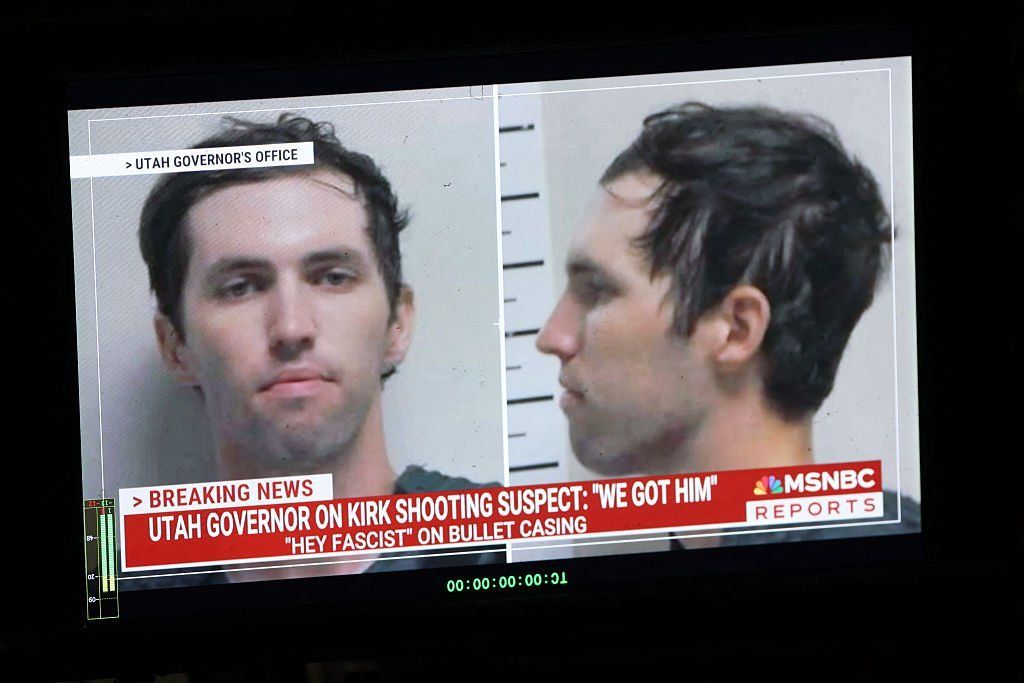

Confusion and conspiracy theories abounded in the wake of the assassination of Charlie Kirk last Wednesday. While people attempted to sift through the information they glimpsed in the fog of the aftermath, several prominent AI chatbots may have hindered more than they helped in the pursuit of truth.

A CBS News report revealed several serious issues with multiple chatbots' handling of the facts in the aftermath of Kirk's death and the ensuing manhunt for the alleged shooter.

The report highlighted several factual inaccuracies stemming from xAI's Grok, search engine Perplexity's AI, and Google's AI overview in the hours and days after the tragedy.

One of the most significant instances came when an X account with 2.3 million followers shared an AI-enhanced version of the suspect's photo that had been turned into a video. The AI enhancement smoothed the suspect's features and distorted his clothing considerably. Although the post was flagged by a community note warning that this was not a reliable way to identify a suspect, it was shared thousands of times and was even reposted by the Washington County Sheriff's Office in Utah before the office issued a correction.

Other false reports from Grok include labeling the FBI's reward offer a "hoax" and, according to CBS News, statements that reports concerning Charlie Kirk's condition "remained conflicting" even after the official report of his death was released.

S. Shyam Sundar, a professor at Penn State University and the director of the university's Center for Socially Responsible Artificial Intelligence, told CBS News that AI chatbots produce responses based on probability, which may often lead to inaccurate information in unfolding events.

"They look at what is the most likely next word or next passage," Sundar said. "It's not based on fact-checking. It's not based on any kind of reportage on the scene. It's more based on the likelihood of this event occurring, and if there's enough out there that might question his death, it might pick up on some of that."

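Sundar's description can be illustrated with a deliberately simplified sketch. The Python below is a toy n-gram word counter, not how Grok or any production chatbot is actually built (those use large neural networks trained over tokens). The corpus fragments and the function names `next_word_distribution` and `most_likely_next` are hypothetical, invented only for illustration. The sketch shows the basic idea: the model returns whichever continuation appears most often in its data, and nothing in that process checks whether the continuation is true.

```python
from collections import Counter

# Hypothetical toy "training data": made-up fragments, not real reports.
CORPUS = [
    "reports remain conflicting",
    "reports remain conflicting",
    "reports remain unconfirmed",
    "reports confirm death",
]


def next_word_distribution(corpus, context):
    """Estimate P(next word | context) by counting what follows `context`."""
    counts = Counter()
    n = len(context)
    for sentence in corpus:
        words = sentence.split()
        # Slide a window of length n over the sentence and record the word after it.
        for i in range(len(words) - n):
            if words[i:i + n] == context:
                counts[words[i + n]] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {word: c / total for word, c in counts.items()}


def most_likely_next(corpus, context):
    """Greedy choice: return the most probable next word, or None if unseen."""
    dist = next_word_distribution(corpus, context)
    if not dist:
        return None
    return max(dist, key=dist.get)


print(next_word_distribution(CORPUS, ["reports", "remain"]))
print(most_likely_next(CORPUS, ["reports", "remain"]))
```

Two of the made-up fragments continue "reports remain" with "conflicting" and only one with "unconfirmed," so the sketch picks "conflicting," even though another fragment confirms the death. Frequency, not fact-checking, decides the answer.
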
Artificial intelligence's sycophantic tendencies may also be to blame, as the third episode of "South Park's" latest season recently highlighted.

Grok, for example, gave a highly flawed response on Friday morning to one user indicating that Tyler Robinson, 22, was opposed to MAGA while his father was a supporter of the movement. In a follow-up question, the user suggested that Robinson's "social media posts" indicated that he may be a MAGA supporter, and Grok quickly changed its tune in its response: "Reports indicate Tyler Robinson is a registered Republican who donated to Trump in 2024. Social media photos show him in a Trump costume for Halloween, and his family appears to support MAGA."

A Grok post timestamped roughly an hour later on Friday denied that he had any known political affiliation, thus showing a discrepancy in responses between users of the same chatbot.

The other AIs fared no better. Perplexity appears to have labeled reports about Kirk's death a "hypothetical scenario" several hours after he was confirmed deceased. According to CBS News, "Google's AI Overview for a search late Thursday evening for Hunter Kozak, the last person to ask Kirk a question before he was killed, incorrectly identified him as the person of interest the FBI was looking for."

While artificial intelligence may be useful for aggregating resources for research, people are realizing that these chatbots are highly flawed when it comes to real-time reporting and separating the wheat from the chaff.

X did not respond to Return's request for comment.

Artificial general intelligence may be coming sooner than many originally anticipated: Elon Musk recently said he believes his latest iteration of Grok could be the first real step toward achieving AGI.

AGI refers to a machine capable of understanding or learning any intellectual task that a human being can — and aims to mimic the cognitive abilities of the human brain.

“Coding is now what AI does,” Blaze Media co-founder Glenn Beck explains. “Okay, that can develop any software. However, it still requires me to prompt. I think prompting is the new coding.”

“And now that AI remembers your conversations and it remembers your prompts, it will get a different answer for you than it will for me. And that’s where the uniqueness comes from,” he continues.

“You can essentially personalize it, right, to you,” BlazeTV host Stu Burguiere confirms. “It’s going to understand the way you think rather than just a general person would think.”

And this makes it even more dangerous.

“This is something that I said to Ray Kurzweil back in 2011. ... I said, ‘So, Ray, we get all this. It can read our minds. It knows everything about us. Knows more about us than anything, than any of us know. How could I possibly ever create something unique?’” Glenn recalls.

“And he said, ‘What do you mean?’ And I said, ‘Well, let’s say I wanted to come up with a competitor for Google. If I’m doing research online and Google is able to watch my every keystroke and it has AI, it’s knowing what I’m looking for. It then thinks, “What is he trying to put together?” And if it figures it out, it will complete it faster than me and give it to the mother ship, which has the distribution and the money and everything else,’” he continues.

“And so you become a serf. The lord of the manor takes your idea and does it because they have control. That’s what the free market stopped. And unless we have control of our own thoughts and our own ideas and we have some safety to where it cannot intrude on those things ... then it’s just a tool of oppression,” he adds.

To enjoy more of Glenn’s masterful storytelling, thought-provoking analysis, and uncanny ability to make sense of the chaos, subscribe to BlazeTV — the largest multi-platform network of voices who love America, defend the Constitution, and live the American dream.

The world is on the verge of a technological revolution unlike anything we’ve ever seen. Artificial intelligence is a defining force that will shape military power, economic growth, the future of medicine, surveillance, and the global balance of freedom versus authoritarianism — and whoever leads in AI will set the rules for the 21st century.

The stakes could not be higher. And yet while America debates regulations and climate policy, China is already racing ahead, fueled by energy abundance.

When people talk about China’s strategy in the AI race, they usually point to state subsidies and investments. China’s command-economy structure allows the Chinese Communist Party to control the direction of the country’s production. For example, in recent years, the CCP has poured billions of dollars into quantum computing.

But another, more important story is at play: China is powering its AI push with a historic surge in energy production.

China has been constructing new coal plants at a staggering speed, accounting for 95% of new coal plants built worldwide in 2023. China just recently broke ground on what is being dubbed the “world’s largest hydropower dam.” These and other energy projects have resulted in massive growth in energy production in China in the past few decades. In fact, production climbed from 1,356 terawatt hours in 2000 to an incredible 10,073 terawatt hours in 2024.

Beijing understands what too many American policymakers ignore: Modern economies and advanced AI models are energy monsters. Training cutting-edge systems requires millions of kilowatt hours of electricity. Keeping AI running at scale demands a resilient and reliable grid.

China isn’t wringing its hands about carbon targets or ESG metrics. It’s doing what great powers do when they intend to dominate: making sure nothing, especially energy scarcity, stands in their way.

Meanwhile, in America, most of our leaders have embraced climate alarmism over common sense. We’ve strangled coal, stalled nuclear, and made it nearly impossible to build new power infrastructure. Subsidized green schemes may win applause at Davos, but they don’t keep the lights on. And they certainly can’t fuel the data centers that AI requires.

The demand for energy from the AI industry shows no sign of slowing. Developers are already bypassing traditional utilities to build their own power plants, a sign of just how immense the pressure on the grid has become. That demand is also driving up energy costs for everyday citizens who now compete with data centers for electricity.

Sam Altman, CEO of OpenAI, has even spoken of plans to spend “trillions” on new data center construction. Morgan Stanley projects that global investment in AI-related infrastructure could reach $3 trillion by 2028.

Already, grid instability is a growing problem. Blackouts, brownouts, and soaring electricity prices are becoming a feature of American life. Now imagine layering the immense demand of AI on top of a fragile system designed to appease activists rather than strengthen a nation.

In the AI age, a weak grid equals a weak country. And weakness is something that authoritarian rivals like Beijing are counting on.

Donald Trump has already done a tremendous amount of work to reorient America toward energy dominance. In the first days of his administration, he released detailed plans explicitly focused on “unleashing American energy,” signaling that the message is being taken seriously at the highest levels.

Over the past several months, Trump has signed numerous executive orders to bolster domestic energy production and end subsidies for unreliable energy sources. Most recently, the Environmental Protection Agency has moved to rescind the Endangerment Finding — a potentially massive blow to the climate agenda that has hamstrung energy production in the United States since the Obama administration.

These steps deserve a lot of credit and support. However, for America to remain competitive in the AI race, we must not only continue this momentum but ramp it up wherever possible. Energy abundance must be understood as a core national policy imperative — not just as a side issue for environmental debates.

Silicon Valley cannot out-innovate a blackout, and Americans can’t code their way around an empty power plant. If China has both the AI models and the energy muscle to run them, while America ties itself in regulatory knots, the future belongs to China.

This is about more than technology. This is about the world we want to live in. An authoritarian China, armed with both AI supremacy and energy dominance, would have the power to bend the global order toward censorship, surveillance, and control.

If we want America to lead the future of artificial intelligence, then we must act now. The AI race cannot be won by Silicon Valley alone. It will be won only if America moves full speed ahead with abundant domestic energy production, climate realism, and universal access to affordable and reliable energy for all.