Suddenly, artificial intelligence is everywhere — generating art, writing essays, analyzing medical data. It’s flooding newsfeeds, powering apps, and slipping into everyday life. And yet, despite all the buzz, far too many Americans — especially conservatives — still treat AI like a novelty, a passing tech fad, or a toy for Silicon Valley elites.

Treating AI like the latest pet-rock fad is not only naïve; it’s dangerous.

AI isn’t just another innovation like email, smartphones, or social media. It has the potential to restructure society itself — including how we work, what we believe, and even who gets to speak — and it’s doing it at a speed we’ve never seen before.

The stakes are enormous. The pace is breakneck. And still, far too many people are asleep at the wheel.

AI isn’t just ‘another tool’

We’ve heard it a hundred times: “Every generation freaks out about new technology.” The Luddites smashed looms. People said cars would ruin cities. Parents panicked over television and video games. These remarks are meant to dismiss genuine concerns about emerging technology as irrational fears.

But AI is not just a faster loom or a fancier phone — it’s something entirely different. It’s not just doing tasks faster; it’s replacing the need for human thought in critical areas. AI systems can now write news articles, craft legal briefs, diagnose medical issues, and generate code — simultaneously, at scale, around the clock.

And unlike past tech milestones, AI is advancing at an exponential pace. Consider ChatGPT’s leap from GPT-3.5 to GPT-4 in less than a year, or how DeepSeek and Claude now outperform humans on elite exams. The regulatory, cultural, and ethical guardrails simply can’t keep up. We’re not riding the wave of progress; we’re getting swept underneath it.

AI is shockingly intelligent already

Skeptics like to say AI is just a glorified autocomplete engine — a chatbot guessing the next word in a sentence. But that’s like calling a rocket “just a fuel tank with fire.” It misses the point.

The truth is, modern AI already rivals, and often exceeds, human performance in several specific domains. Systems like OpenAI’s GPT-4, Anthropic’s Claude, and Google’s Gemini score well above the average human on IQ tests, according to ongoing testing by organizations like Tracking AI. And these systems improve with every iteration, often faster than we can predict or regulate.

AI doesn’t need to become “sentient” to matter. In its current form, it is already capable of replacing jobs, overseeing supply-chain logistics, and even shaping culture.

AI will disrupt society — fast

Some treat the unfolding age of AI as just another society-improving innovation: Jobs will be lost, others will be created, and we’ll all adapt. But those previous transformations took decades to unfold. The car took nearly 50 years to become ubiquitous. The internet needed about 25 years to transform communication and commerce. These shifts, though massive, were gradual enough to give society time to adapt and respond.

AI is not affording us that luxury. The AI shift is happening now, and it’s coming for white-collar jobs that once seemed untouchable.

Reports published by the World Economic Forum and Goldman Sachs suggest that AI could disrupt hundreds of millions of jobs globally over the next several years. And the jobs at risk are not factory jobs but knowledge work. AI already edits videos, writes advertising copy, designs graphics, and manages customer service.

This isn’t about horses and buggies. This is about entire industries shedding their human workforces in months, not years. Journalism, education, finance, and law are all in the crosshairs. And if we don’t confront this disruption now, we’ll be left scrambling when it hits our own communities.

AI will become inescapable

You may think AI doesn’t affect you. Maybe you never plan on using it to write emails or generate art. But you won’t stay disconnected from it for long. AI will soon be baked into everything.

Your phone, your bank, your doctor, your child’s education: All will rely on AI. Personal AI assistants will become standard, just like Google Maps and Siri. Policymakers will use AI to draft and analyze legislation. Doctors will use AI to diagnose ailments and prescribe treatment. Teachers will use AI to develop lesson plans (if all of this isn’t happening already). Algorithms will increasingly dictate what media you consume, what news stories you see, even what products you buy.

We went from dial-up to internet dependency in less than 15 years. We’ll be just as dependent on AI in less than half that time. And once that dependency sets in, turning back becomes nearly impossible.

AI will be manipulated

Some still think of AI as a neutral calculator. Just give it the data, and it’ll give you the truth. But AI doesn’t run on math alone — it runs on values, and programmers, corporations, and governments set those values.

Google’s Gemini model was caught rewriting history to fit progressive narratives — generating images of black Nazis and erasing white historical figures in an overcorrection for the sake of “diversity.” China’s DeepSeek AI refuses to acknowledge the Tiananmen Square massacre or the Uyghur genocide, parroting Chinese Communist Party talking points by design.

Imagine AI tools with political bias embedded in your child’s tutor, your news aggregator, or your doctor’s medical assistant. Imagine relying on a system that subtly steers you toward certain beliefs — not by banning ideas but by never letting you see them in the first place.

We’ve seen what happened when environmental, social, and governance (ESG) standards and diversity, equity, and inclusion (DEI) programs transformed how corporations operated, prioritizing subjective political agendas over the demands of consumers. Now imagine those same ideological filters hardcoded into the very infrastructure that will power the society of the near future. We could become dependent on a system designed to coerce each of us without our ever knowing it’s happening.

Our liberty problem

AI is not just a technological challenge. It’s a cultural, economic, and moral one. It’s about who controls what you see, what you’re allowed to say, and how you live your life. If conservatives don’t get serious about AI now — before it becomes genuinely ubiquitous — we may lose the ability to shape the future at all.

This is not about banning AI or halting progress. It’s about ensuring that as this technology transforms the world, it doesn’t quietly erase our freedom along the way. Conservatives cannot afford to sit back and dismiss these technological developments. We need to be active participants in shaping AI’s ethical and political boundaries, ensuring that liberty, transparency, and individual autonomy are protected at every stage of this transformation.

The stakes are clear. The timeline is short. And the time to make our voices heard is right now.

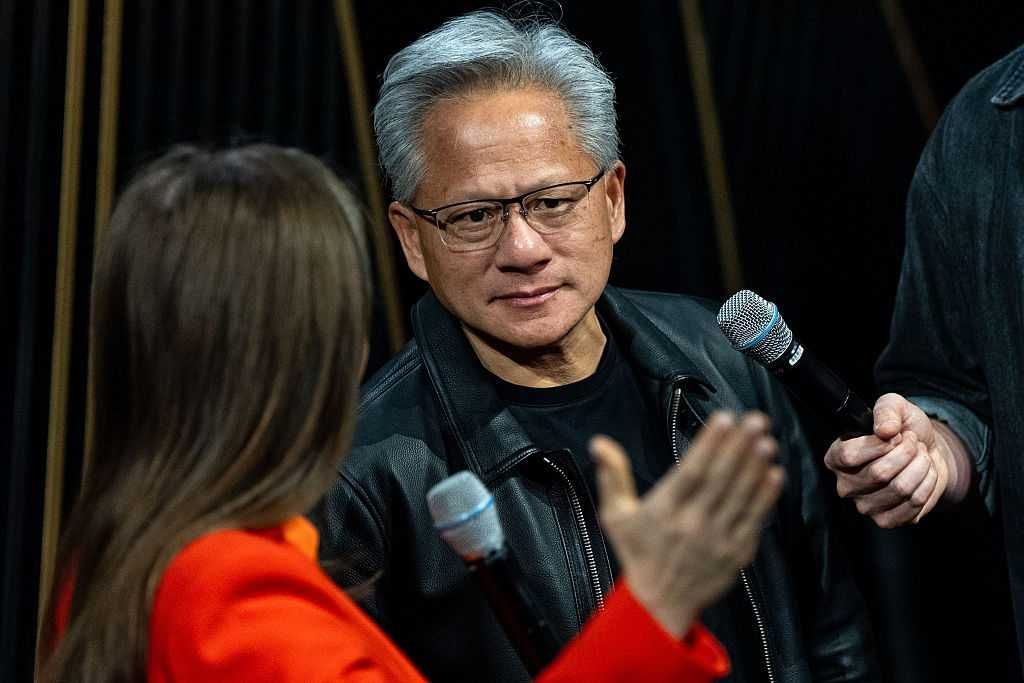

Photo by Alex Wong/Getty Images

As Trump's polling lead continues, the left's case of 'Biden-copium' grows and grows

Despite Joe Biden’s dismal standing in the polls, his constant stream of senile gaffes, and his inability to properly address any emergency, let alone the American people, the left still goes to bat for him.

Stu Burguiere, host of “Stu Does America,” calls it the left’s “Biden-copium,” and a recent New York Times op-ed by Ezra Klein illustrates the phrase perfectly.

The article details a series of liberal theories about why Joe Biden is losing and what he should do about it. Although Klein is a liberal himself, Stu finds himself agreeing with Klein’s take on these theories.

Theory number one is that “the polls are wrong.” Klein rejects it, not because polls are never wrong, but because when they have been wrong, they’ve been biased toward Democrats.

“To the extent polls have been wrong in recent presidential elections, they’ve been wrong because they’ve been biased toward Democrats. Trump ran stronger in 2016 and 2020 than polls predicted. Sure, the polls could be wrong. But that could mean Trump is stronger, not weaker, than he looks,” Klein writes.

“This is totally accurate,” Stu comments. “The polls have been, generally speaking, relatively accurate, and I say those words specifically because what they are not is accurate. They are never accurate. They’re not accurate because they aren’t designed to tell us exactly what we want to know.”

Stu doesn’t buy the next theory at all, which is essentially that the media is being too kind to Donald Trump.

“I don’t think it’s the mainstream media’s fault if you’re worried about Joe Biden winning. They’re doing everything they can to make this happen. The question really is: Will it be enough right now?” Stu says.

Stu considers theory number three the most “idiotic of all”: “It’s a bad time to be an incumbent.”

“Polls are not showing an anti-incumbent mood. They’re showing an anti-Biden mood,” Klein writes.

“Yeah, look. The incumbency is your most powerful weapon. The only reason this is close at all is because Joe Biden is an incumbent,” Stu says.