Roomba maker iRobot files for bankruptcy, putting it in Chinese hands

Autonomous vacuums could go extinct unless they are made in the United States.

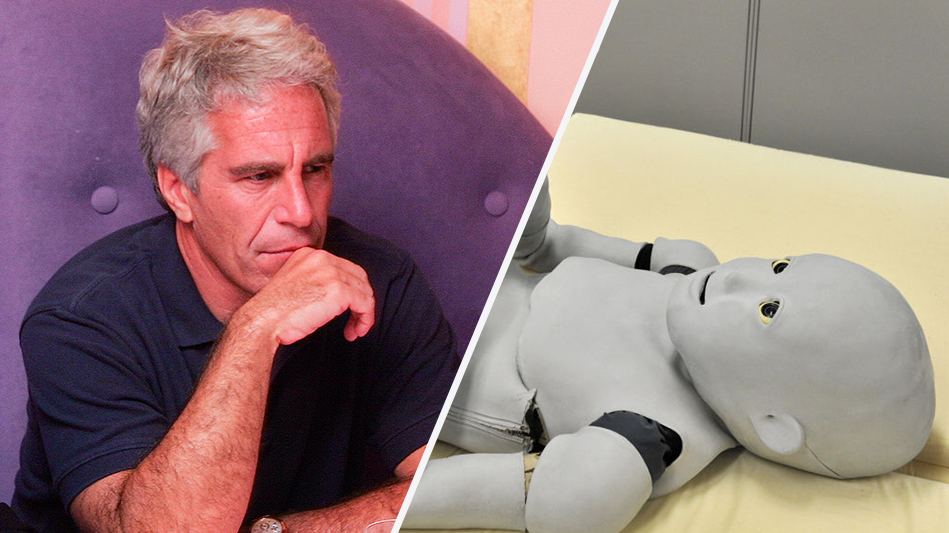

This is the harsh reality facing companies like iRobot, the creator of the Roomba, which just filed for bankruptcy.

Despite generating over $680 million in revenue in 2024, iRobot has been crippled by U.S. tariffs. A 46% tariff on imports from Vietnam raised iRobot's costs by $23 million in 2025, according to Reuters, which reviewed the court filings.

The court filings also reportedly noted that while Roomba still dominates the U.S. and Japanese markets, the company lost too much money on price reductions and on investments in technological upgrades made to keep pace with its competitors.

According to the Verge, the company said it will continue to operate "with no anticipated disruption to its app functionality, customer programs, global partners, supply chain relationships, or ongoing product support."

Simply put, after more than 20 years on the market, the Roomba is able to operate without online connectivity.

Going forward, the bankruptcy will put iRobot under the control of the Chinese manufacturing company that holds its debt.

Court documents reportedly showed that Picea, a Chinese manufacturer, purchased iRobot and took on its debt, which is estimated at about $190 million. The vacuum company took on the debt in 2023 to refinance its operations, Reuters reported.

The debt remained even though Amazon paid a $94 million termination fee when it backed out of a $1.7 billion acquisition deal in 2024, according to the New York Times.

It was not long ago that iRobot was a highly valued company: its market value stood at $3.56 billion in 2021. It is now estimated to be worth just $140 million.

New owner Picea will take 100% ownership of the company and cancel the $190 million in debt, along with a further $74 million that iRobot owed under a manufacturing agreement.

iRobot did not only have to contend with tariffs on Vietnam. The manufacturing it established in Malaysia in 2019 was also likely affected.

The company never announced that it had ended manufacturing in the country. If production remained there, it would likely have been subject to a 24% tariff rate from the Trump administration, which covered machinery and electronics.

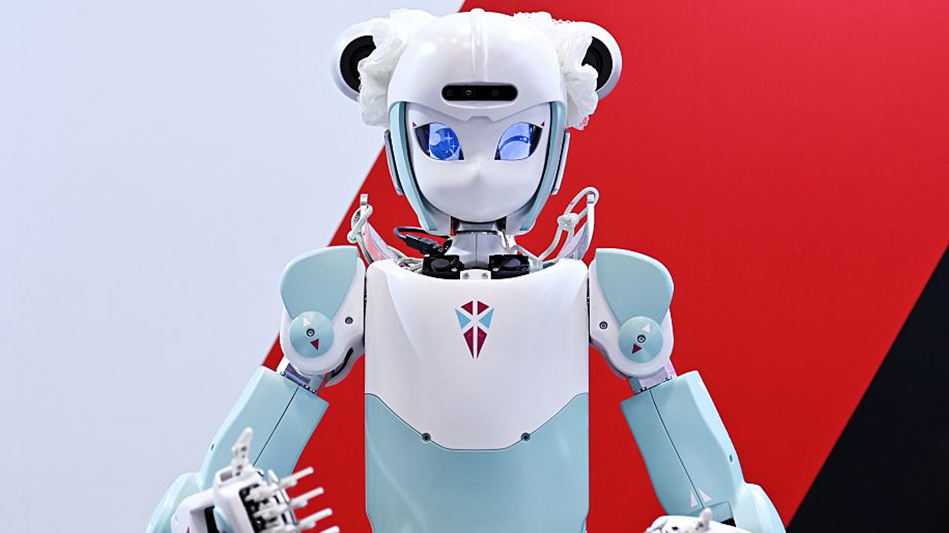

Photo by David Mareuil/Anadolu via Getty Images

mikkelwilliam via iStock/Getty Images

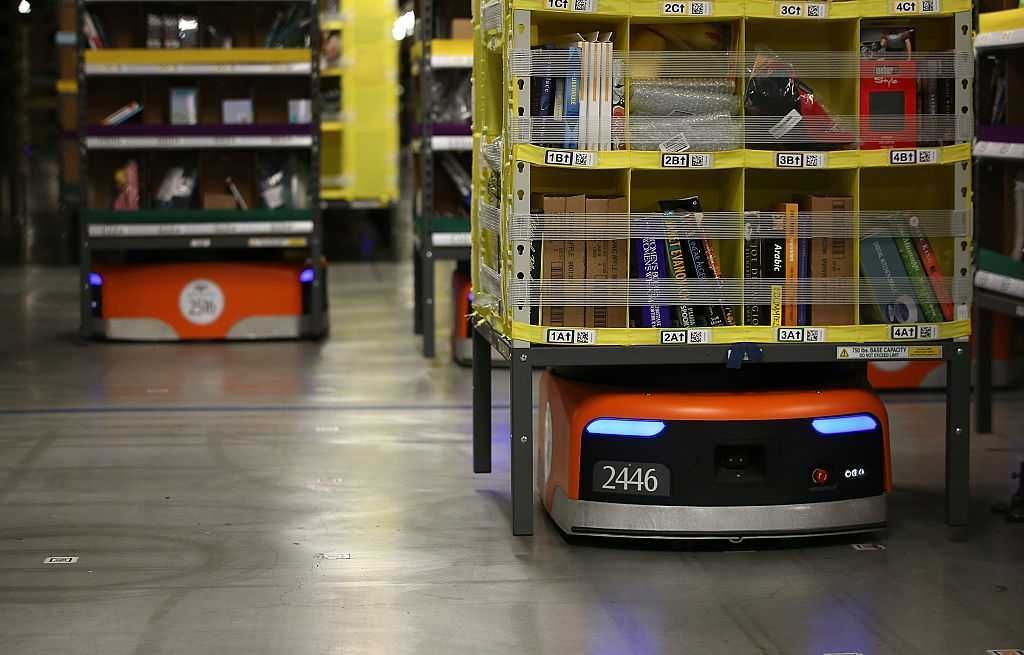

Photo by Paul Hennessy/NurPhoto via Getty Images

Photo by Justin Sullivan/Getty Images

A robot prepares to pick up a tote containing product during the first public tour of the newest Amazon Robotics fulfillment center on April 12, 2019, in Orlando, Florida. (Photo by Paul Hennessy/NurPhoto via Getty Images)

Photo by Joan Cros/NurPhoto via Getty Images

The Media Lab on the Massachusetts Institute of Technology (MIT) campus in Cambridge, Massachusetts, 2023. Simon Simard/Bloomberg via Getty Images
